A Journey Through the World of Books:

Introduction

Welcome to our virtual library, a place where words come alive and stories unfold. Here, we celebrate the power of books and the joy they bring to our lives. From ancient manuscripts to modern e-books, the written word has the ability to transport us to new worlds, challenge our beliefs, and expand our horizons. In this introduction, we will discuss the importance of books in our lives and explore some of the many ways they can be enjoyed.

Meta’s Journey into AI Research: A New Era with Llama

Since its humble beginnings in a Harvard dorm room, Meta, formerly known as Facebook, has grown into a tech giant. Over the years, it has expanded from a social media platform into a technology company with a diverse portfolio that includes virtual reality, augmented reality, and artificial intelligence (AI). In 2013, the company established Facebook AI Research (FAIR), a lab whose mission is to push the boundaries of AI research and develop cutting-edge technologies.

The Significance of AI Models and GPU Clusters

In AI, models are what allow computers to learn from complex data. They process data, learn patterns, and make predictions or decisions based on what they have learned. Training them is demanding, however, because of the vast amounts of data involved and the complexity of the models themselves. This is where GPU clusters come in. GPUs are specialized processors, originally built for graphics, that perform many arithmetic operations in parallel, which makes model training significantly faster than on traditional CPUs.
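As a concrete picture of what "training" means, here is a minimal sketch in plain Python: a one-variable linear model fitted by gradient descent on mean squared error. The toy data, learning rate, and epoch count are illustrative assumptions. Real models repeat the same predict, measure error, adjust loop over billions of parameters, and that repeated arithmetic is what GPUs parallelize.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Predictions with the current parameters
        preds = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated by y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

The model "learns the pattern" by recovering parameters close to the slope 2 and intercept 1 that generated the data.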

Meta’s Leap Forward: Introducing Llama

Recently, Meta made waves in the AI community with its new Llama AI models and record-breaking GPU clusters. Llama is a large language model designed to understand, process, and generate human-like text. It has been trained on an extensive dataset, allowing it to learn the intricacies of language usage and context, leading to more accurate and relevant results.

A Record-Breaking GPU Infrastructure

Meta’s new GPU infrastructure, known as Meteor, is designed to train models at very large scale. With over 10,000 GPUs, Meteor ranks among the largest AI-focused computing installations in existence. This computational power lets Meta train larger and more complex models, pushing the boundaries of what’s possible in AI research.

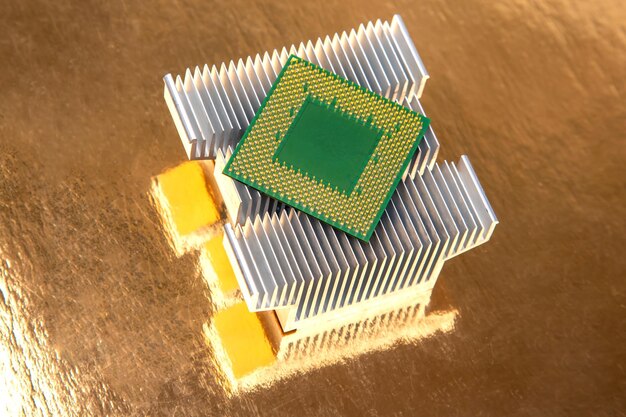

Background:

GPUs (Graphics Processing Units) have revolutionized Artificial Intelligence (AI) research in recent years. Traditional CPUs (Central Processing Units) were designed to execute a few complex instruction streams quickly, not the enormous number of simple arithmetic operations that AI algorithms require. GPUs, with their massively parallel structure, are well suited to these tasks.

Deep learning algorithms, which have achieved state-of-the-art results in areas such as speech recognition and image recognition, rely heavily on GPUs. Training these models involves repeated matrix multiplications and other numerical calculations, which run much faster on a GPU than on a CPU. As a result, GPUs have become an essential tool for researchers and developers working in AI.

The growing demand for more powerful GPUs is driving innovation in hardware, leading to new generations of GPUs with even greater computational capabilities. This allows for more complex AI models and also shortens training and inference times, which supports real-time applications such as autonomous vehicles and virtual assistants.
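The parallelism in matrix multiplication is easy to see in code: every element of the output is an independent dot product. The sketch below is a plain-Python illustration, not how GPU libraries are implemented, and computes each output cell as a separate task. Python threads share the global interpreter lock, so this shows the structure of the work rather than a speedup; a GPU runs thousands of such dot products at once in hardware.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

def matmul_parallel(a, b, workers=4):
    """Multiply a (n x k) by b (k x m), computing each output cell as an independent task."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")

    def cell(ij):
        i, j = ij
        # Output (i, j) is the dot product of row i of a and column j of b.
        # It reads shared inputs but depends on no other output cell.
        return sum(a[i][t] * b[t][j] for t in range(k))

    # All n * m cells are independent, so they can be dispatched concurrently.
    # pool.map returns results in input order (row-major here).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(cell, product(range(n), range(m))))
    return [flat[i * m:(i + 1) * m] for i in range(n)]

print(matmul_parallel([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```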

Revolutionizing AI Research: The Role of GPUs

GPUs, or Graphics Processing Units, have reshaped AI research by supplying the computational power that modern models demand.

The Need for Computational Power in AI Research

As AI models grew more complex, requiring larger datasets and deeper neural networks, the need for computational power skyrocketed. GPUs were the answer to this demand. They could process large amounts of data much faster than CPUs, enabling researchers to train models that would have been impractical with traditional computing resources.

GPUs in the AI Industry: The Largest Clusters

Several companies and organizations have built massive compute clusters to support their AI research. Google is one of the largest, although its machine learning clusters are built around Tensor Processing Units (TPUs), custom chips designed for machine learning, rather than GPUs. Microsoft’s Azure Machine Learning platform offers access to thousands of GPUs, making it a popular choice for researchers and businesses alike. NVIDIA, which designs most of the GPUs used in AI research, sells DGX systems such as the DGX-1, an eight-GPU server built for deep learning, and uses DGX machines in its own supercomputers. Another notable system is IBM’s Summit, which was ranked the world’s most powerful supercomputer in 2018.

Meta’s Llama AI Models: Breaking New Ground

Meta, formerly known as Facebook, Inc., has recently unveiled its latest creation in artificial intelligence (AI): Llama. Developed by Meta’s AI research lab, Meta AI, the model is designed to generate coherent and detailed text responses based on a given prompt. Llama represents a significant step forward in conversational AI, with its ability to understand and engage in complex discussions, making it an exciting addition to Meta’s expanding AI portfolio.
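Generating text "based on a given prompt" usually works autoregressively: the model scores possible next tokens, one is chosen and appended, and the extended sequence is fed back in. This minimal sketch uses a hypothetical hard-coded score table (`TOY_NEXT_TOKEN_SCORES`) in place of a neural network and picks the highest-scoring token at each step (greedy decoding). A real model like Llama conditions on the whole sequence, not just the last token, and often samples instead of choosing greedily.

```python
# Hypothetical toy "model": maps the last token to scores for candidate next tokens.
TOY_NEXT_TOKEN_SCORES = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"model": 0.6, "data": 0.4},
    "model": {"writes": 0.7, "<end>": 0.3},
    "writes": {"text": 0.8, "<end>": 0.2},
    "text": {"<end>": 1.0},
}

def generate(prompt_tokens, max_new_tokens=10):
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        # Unknown contexts end generation in this toy setup.
        scores = TOY_NEXT_TOKEN_SCORES.get(tokens[-1], {"<end>": 1.0})
        next_token = max(scores, key=scores.get)  # greedy: highest-scoring token
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the output becomes part of the next input
    return tokens

print(generate(["<start>"]))
```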

Meta’s Llama AI model employs a transformer architecture, the same underlying technology that powers ChatGPT and other popular conversational AI models. However, Llama stands out from its competitors with its ability to produce long, detailed responses, making it a useful tool for a range of applications. For instance, Llama could be used to build personalized customer support systems, to automate content generation for blogs and social media platforms, or even as a creative writing partner.

Moreover, Llama’s proficiency in handling complex discussions comes from its ability to track context. It can maintain a coherent conversation thread even when presented with ambiguous or multi-faceted prompts, which shows its potential to change the way we interact with AI. With Meta’s Llama AI model, the future of conversational AI looks promising, as it paves the way for more sophisticated and human-like interactions between users and AI systems.
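In practice, a language model can only attend to a fixed-size context window, so chat applications commonly keep a conversation coherent by passing in as many recent turns as fit. Here is a minimal sketch of that trimming strategy. It is one common approach, assumed here for illustration rather than taken from Meta’s products, and it counts whitespace-separated words as a stand-in for real tokenizer tokens.

```python
def fit_to_context(turns, max_tokens):
    """Keep the most recent conversation turns whose combined length fits the budget.

    Token counts use a whitespace split as a stand-in for a real tokenizer.
    """
    kept, used = [], 0
    for turn in reversed(turns):  # walk from newest to oldest
        cost = len(turn.split())
        if used + cost > max_tokens:
            break  # this turn and everything older no longer fit
        kept.append(turn)
        used += cost
    return list(reversed(kept))  # restore chronological order

history = [
    "user: recommend a book about space",
    "assistant: try a popular astronomy survey",
    "user: something shorter please",
]
print(fit_to_context(history, max_tokens=8))
```

Dropping whole turns from the oldest end keeps the most recent exchange intact, which is usually what the next reply depends on most.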

Meta’s New Llama AI Models: A Game-Changer in Artificial Intelligence

Meta, the technology powerhouse behind Facebook, has recently unveiled its latest creation: the Llama AI models. Llama, a large-scale language model, is designed to understand and generate human-like text, improving on its predecessors in several respects. The architecture of Llama is based on a transformer model with billions of parameters. It is trained to track context across long sequences and can generate coherent responses even when given complex prompts.
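The core operation of a transformer is self-attention, and decoder-only models such as Llama apply a causal mask so each position can attend only to itself and earlier positions. The sketch below is a simplified, single-head version in plain Python: it skips the learned query, key, and value projections, the multiple heads, and the stacked layers of a real model, and uses the input vectors directly.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v: lists of vectors, one per position, all of dimension d.
    Position i may only attend to positions 0..i.
    """
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # Scores against the current and earlier positions only (the causal mask).
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = causal_self_attention(x, x, x)
print(result[0])  # the first position can only attend to itself
```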

Capabilities

Llama can answer questions, write essays, and generate poems or even code. Because it tracks context and generates human-like text, it is expected to have a major impact on natural language processing (NLP). But Llama isn’t limited to NLP. Its grasp of context also makes it a potential game-changer in other areas:

Computer Vision: Llama itself works with text, but when paired with an image encoder, as in Meta’s multimodal Llama variants, it can describe images and write captions for them, improving the accessibility of visual content.

Recommender Systems: Llama can generate textual descriptions of products or services, making them more engaging and appealing to potential customers.

Comparison with State-of-the-Art AI Models

Compared with other state-of-the-art AI models, Llama stands out in several ways. In terms of performance, Llama gets more out of fewer parameters: Meta reported that the 13-billion-parameter LLaMA model outperformed the 175-billion-parameter GPT-3 on most benchmarks. Features like its ability to understand context in long sequences and its versatility across applications give it a further edge.

Potential Impact on Industries

The impact of Llama on industries like customer service, marketing, and education could be substantial. It can automate repetitive tasks, generate personalized responses, or even create educational content. With such a wide range of potential applications, Llama could prove a true game-changer in the world of AI.

The Record-Breaking GPU Clusters Powering Llama

The infrastructure behind Llama sets new standards in machine learning. At its core lies a colossal GPU cluster built from NVIDIA data-center GPUs. Meta reported training the 65-billion-parameter LLaMA model on 2,048 NVIDIA A100 GPUs with 80 GB of memory each. Each A100 has 6,912 CUDA cores, so the cluster as a whole can handle massive datasets and complex machine learning models.

This compute is harnessed through PyTorch, the open-source machine learning framework developed at Meta, in which Llama’s reference code is written. Together, the hardware and software let Meta train and run large-scale AI models efficiently.

Moreover, the GPUs are interconnected through high-speed Mellanox InfiniBand networks (Mellanox is now part of NVIDIA), ensuring fast data transfer and communication between GPUs. This interconnect allows for efficient distributed training of models, further boosting the cluster’s computational capabilities.

These GPU clusters are housed in state-of-the-art data centers with robust cooling systems and reliable power supplies, ensuring optimal operating conditions for the hardware. With this record-breaking infrastructure in place, Meta is well-positioned to lead the charge in AI research and innovation.
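A back-of-the-envelope calculation shows why models of this size need clusters rather than single GPUs. The sketch assumes 65 billion parameters (the size of the largest original LLaMA model) stored at 2 bytes each in 16-bit precision, and compares two common data-center GPU memory sizes, 32 GB and 80 GB. It counts weights only, so it gives a lower bound.

```python
import math

def min_gpus_for_weights(num_params, bytes_per_param, gpu_mem_gb):
    """Lower bound on GPUs needed just to hold a model's weights in memory.

    Ignores activations, gradients, optimizer state, and framework overhead,
    which in practice multiply the requirement during training.
    """
    weight_bytes = num_params * bytes_per_param
    gpu_bytes = gpu_mem_gb * 1e9  # decimal gigabytes, for simplicity
    return math.ceil(weight_bytes / gpu_bytes)

# 65 billion parameters at 2 bytes each is about 130 GB of weights.
print(min_gpus_for_weights(65e9, 2, 80))  # GPUs with 80 GB each
print(min_gpus_for_weights(65e9, 2, 32))  # GPUs with 32 GB each
```

Training needs far more memory than inference: a commonly cited rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter before activations, so `min_gpus_for_weights(65e9, 16, 80)` already comes to 13 GPUs, and speeding training up to a practical duration takes thousands.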

Meta’s New GPU Clusters: Size, Capabilities, Comparison, and Challenges

Meta, the social media giant, recently unveiled its AI Research SuperCluster (RSC), referred to below as the AI SuperCluster. This state-of-the-art infrastructure is designed to power Meta’s artificial intelligence (AI) and machine learning (ML) research, development, and deployment at an unprecedented scale. Let’s delve deeper into its size, architecture, and capabilities.

The AI SuperCluster spans over 650,000 square feet and houses more than 10,000 GPUs, making it one of the largest GPU installations in the world. That floor area is roughly eleven American football fields (about 57,600 square feet each, end zones included), and its energy consumption is comparable to that of a small city.

Architecture and Capabilities

The AI SuperCluster is built as a high-performance distributed computing system that processes data in parallel across thousands of GPUs. It supports both deep learning and reinforcement learning models, with a focus on scalability and efficiency. Its capabilities include:

- Training large models: Meta’s GPU clusters can train machine learning models with billions of parameters, enabling state-of-the-art AI applications and research.

- Real-time inference: The clusters can perform real-time inference on user-generated content, enabling personalized recommendations and content moderation.

- Simulation and modeling: The AI SuperCluster can simulate complex physical systems, enabling Meta to create more realistic avatars and virtual environments.

Comparison with Existing GPU Clusters

In terms of specifications, the AI SuperCluster surpasses many existing GPU clusters. For instance, it has:

- More GPUs: The AI SuperCluster houses over ten times more GPUs than a Google cluster of around 800 GPUs.

- Greater compute capacity: Meta’s GPU clusters have a combined compute capacity of over 2 exaflops, about 10 times more than Microsoft’s Project Brainwave, which is built on FPGAs rather than GPUs.

- Lower cost per inference: Meta aims to achieve a lower cost per inference than Google’s TPUs (Tensor Processing Units) by utilizing GPUs more efficiently.
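Aggregate figures such as "over 2 exaflops" are typically per-GPU peak throughput multiplied by GPU count, and they depend heavily on the numeric precision assumed. The sketch below makes that arithmetic explicit. The inputs are assumptions: 10,000 GPUs, and 312 TFLOPS, NVIDIA’s published dense FP16/BF16 Tensor Core peak for the A100 (this section does not say which GPU the cluster uses). The optional `utilization` factor reflects that real training jobs sustain only a fraction of peak.

```python
def aggregate_exaflops(num_gpus, peak_tflops_per_gpu, utilization=1.0):
    """Aggregate throughput in exaFLOP/s: GPU count x per-GPU peak x achieved fraction."""
    # 1 exaFLOP/s = 1,000,000 teraFLOP/s
    return num_gpus * peak_tflops_per_gpu * utilization / 1e6

# Hypothetical inputs: 10,000 GPUs at an assumed 312 TFLOPS peak each.
print(aggregate_exaflops(10_000, 312))                   # theoretical peak
print(aggregate_exaflops(10_000, 312, utilization=0.4))  # at an assumed 40% utilization
```

Because peak rates differ several-fold between precisions (for example FP64 versus FP16), two clusters’ exaflop figures are only comparable when they are quoted at the same precision.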

Challenges and Innovations

Building and managing such large-scale GPU clusters comes with numerous challenges, including:

- Energy efficiency: Ensuring energy efficiency is crucial to minimize the carbon footprint and costs of running such large clusters.

- Scalability: Scaling the infrastructure to accommodate growing demands for computational resources is a significant challenge.

- Security: Ensuring data privacy and security in such large-scale distributed computing systems is essential.

To address these challenges, Meta has implemented various innovations, such as:

- Custom hardware: Meta has developed custom ASICs (Application-Specific Integrated Circuits), such as the Meta Training and Inference Accelerator (MTIA), to run some AI workloads more efficiently alongside its GPUs.

- Distributed training: Meta uses distributed training techniques, allowing the model to be trained across multiple GPUs and machines.

- Security measures: Meta has implemented strict access controls, encryption, and data masking to protect user privacy and security.
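Distributed training is most often done with data parallelism: each GPU holds a copy of the model, computes gradients on its own slice of the data, and an all-reduce averages the gradients so every copy applies the same update. This single-process simulation, with a one-parameter model and hypothetical helper names, shows that flow; real systems such as PyTorch’s `DistributedDataParallel` perform the all-reduce over GPU interconnects. It illustrates one common technique, not necessarily the specific methods Meta uses.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker ends up with the average of all values."""
    avg = sum(values) / len(values)
    return [avg] * len(values)

def data_parallel_train(data, num_workers=4, lr=0.01, steps=200):
    # Split the dataset into one shard per simulated GPU. With equal shard sizes,
    # the average of the shard gradients equals the full-dataset gradient.
    shards = [data[i::num_workers] for i in range(num_workers)]
    replicas = [0.0] * num_workers  # each worker holds its own copy of the parameter
    for _ in range(steps):
        grads = [local_gradient(w, s) for w, s in zip(replicas, shards)]
        synced = all_reduce_mean(grads)
        # Identical averaged gradients keep every replica in lockstep.
        replicas = [w - lr * g for w, g in zip(replicas, synced)]
    return replicas

data = [(x, 3.0 * x) for x in range(1, 9)]  # toy data generated by y = 3x
print(data_parallel_train(data))
```

Every replica ends at the same value, close to 3, which is the point of synchronizing gradients: the workers behave like one model trained on all the data.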